Ideally, your lexer should simply return slices over the input, wrapped in a struct with an enum tag to indicate what type of token it is. This may not always be possible if you're reading from a file if that's the case, consider using std.mmfile to memory-map the input into memory so that you can freely slice over the entire input without needing to handle caching/paging yourself. for returning tokens) as much as possible, avoid. (This is a common mistake by people who write lexers/parsers in D.)Ģ) Use slices of the input (e.g. If some object needs to be created frequently (e.g., tokens), try your best to make it a struct rather than a class, to avoid incurring allocation overhead and to decrease GC pressure. Some things to watch out for when writing high-performance string-processing in D:ġ) Avoid GC allocations as much as possible. So it's unsurprising that performance isn't the best. It does have a nice API, but for a truly fast lexer at the very least you need to compile the lexing rules down into a fast IR, which d-lex does not do.

I took a quick glance at d-lex, and immediately noticed that every rule match incurs a GC allocation, which is sure to cause a performance slowdown.

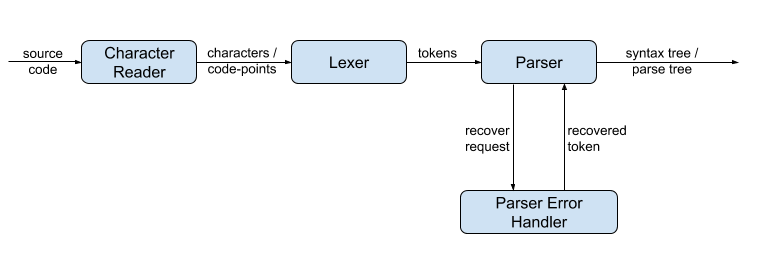

LEXER GENERATOR ALGORITHM CODE

Fortunately, you can interface with the generated C code quite easily from D. Unfortunately, AFAIK it does not support D directly it generates a lexer in C that you then compile. If you want a *really* fast lexer, I recommend using GNU Flex ( / westes/flex/). Of course, if the standard library (or its derivatives, such as mir) has features that could be of interest to me for this lexer, I am interested. I could of course write it by hand, but I would be interested to know what already exists, so as not to reinvent the wheel. Therefore, I wonder what resources there are, in D, for writing an efficient lexer. As the parser will only have to manipulate tokens, I think that the performance of the lexer will be more important to consider. I started writing a lexer with the d-lex package ( code.dl ang.org/ packages/d-lex), it works really well, unfortunately, it's quite slow for the number of lines I'm aiming to analyse (I did a test, for a million lines, it lasted about 3 minutes). To do so, I would write a parser by hand using the recursive descent algorithm, based on a stream of tokens. > For a project with good performance, I would need to be able to analyse text. On Fri, at 07:49:12PM +0000, vnr via Digitalmars-d-learn wrote:
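To make the advice about struct tokens and input slices a bit more concrete, here is a minimal sketch of what such a lexer might look like. The names `TokenType`, `Token` and `Lexer`, and the toy token rules (identifiers, integers, single-character symbols), are my own assumptions for illustration only; they do not come from d-lex or from any existing library.

```d
import std.ascii : isAlpha, isAlphaNum, isDigit, isWhite;

// Hypothetical token categories, chosen only for this example.
enum TokenType { identifier, number, symbol, eof }

// A struct, not a class: creating one does not touch the GC heap.
struct Token
{
    TokenType type;
    const(char)[] text; // a slice of the original input, never a copy
}

// Hypothetical hand-written lexer over an in-memory buffer.
struct Lexer
{
    const(char)[] input;
    size_t pos;

    // @nogc makes the compiler reject any accidental GC allocation here.
    Token next() @nogc nothrow pure @safe
    {
        // Skip whitespace between tokens.
        while (pos < input.length && isWhite(input[pos]))
            ++pos;
        if (pos >= input.length)
            return Token(TokenType.eof, input[$ .. $]);

        immutable start = pos;
        immutable c = input[pos];
        TokenType type;
        if (isAlpha(c) || c == '_')
        {
            type = TokenType.identifier;
            while (pos < input.length
                   && (isAlphaNum(input[pos]) || input[pos] == '_'))
                ++pos;
        }
        else if (isDigit(c))
        {
            type = TokenType.number;
            while (pos < input.length && isDigit(input[pos]))
                ++pos;
        }
        else
        {
            // Anything else is treated as a one-character symbol.
            type = TokenType.symbol;
            ++pos;
        }
        // The token text is just a window into the input buffer.
        return Token(type, input[start .. pos]);
    }
}

unittest
{
    auto lexer = Lexer("count = 42");
    auto tok = lexer.next();
    assert(tok.type == TokenType.identifier && tok.text == "count");
    tok = lexer.next();
    assert(tok.type == TokenType.symbol && tok.text == "=");
    tok = lexer.next();
    assert(tok.type == TokenType.number && tok.text == "42");
    assert(lexer.next().type == TokenType.eof);
}
```

Marking `next` as `@nogc` is a cheap way to have the compiler catch allocations creeping into the hot path. For file input, the same `Lexer` could presumably be pointed at the `void[]` you get from slicing a `std.mmfile.MmFile`, cast to `const(char)[]`; the tokens then stay valid for as long as the mapping is alive.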
